Distributed Benchmark

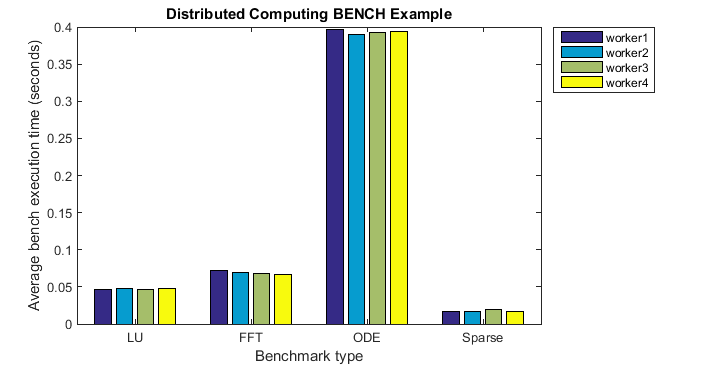

This example runs a MATLAB® benchmark that has been modified for Parallel Computing Toolbox™. We execute the benchmark on our workers to determine the relative speeds of the machines on our distributed computing network. Fluctuations of 5 or 10 percent in the measured times of repeated runs on a single machine are not uncommon.
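To get a feel for run-to-run fluctuation, the following minimal sketch times a small computation several times on the local machine and reports the relative spread. The matrix multiplication, the problem size, and the number of repetitions are assumptions chosen for illustration; they are not the tasks that pctdemo_task_bench runs.

```matlab
% Time the same small computation several times on one machine.
numRuns = 5;                      % arbitrary number of repetitions
times = zeros(1, numRuns);
for k = 1:numRuns
    tStart = tic;
    A = rand(500);                % illustrative workload, not the real benchmark
    B = A * A;                    %#ok<NASGU> result is not needed, only the time
    times(k) = toc(tStart);
end

% Relative spread between the fastest and the slowest run, in percent.
spreadPct = 100 * (max(times) - min(times)) / min(times);
fprintf('Fastest: %.4f s, slowest: %.4f s, spread: %.1f%%\n', ...
        min(times), max(times), spreadPct);
```

The first iteration often takes longer than the rest because of one-time setup costs, so the spread printed here can exceed the figures quoted above.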

This benchmark is intended to compare the performance of one particular version of MATLAB on different machines. It does not offer direct comparisons between different versions of MATLAB. The tasks and problem sizes change from version to version.

For details about the benchmark, view the code for pctdemo_task_bench.

Load the Example Settings and the Data

The example uses the default profile when identifying the cluster to use. The profiles documentation explains how to create new profiles and how to change the default profile. See Customizing the Settings for the Examples in the Parallel Computing Toolbox for instructions on how to change the example difficulty level or the number of tasks created.

Because this example uses callbacks, we also verify that we have an MJS cluster object to use, rather than one of the other cluster types.

[difficulty, myCluster, numTasks] = pctdemo_helper_getDefaults();
if ~isequal(myCluster.Type, 'MJS')
    error('pctexample:benchdist:NotMJS', ...
          ['This example uses callbacks, which are only available with ' ...
           'an MJS cluster.']);
end
fprintf(['This example will submit a job with %d task(s) ' ...
         'to the cluster.\n'], numTasks);

This example will submit a job with 4 task(s) to the cluster.

We will repeat the benchmark count times, and run a total of numTasks benchmarks on the network. Because we cannot control which workers execute the tasks, some of them may be benchmarked more than once. Also, note that the example difficulty level has no effect on the computations we perform in this example. You can view the code for pctdemo_setup_bench for full details.

[fig, count] = pctdemo_setup_bench(difficulty);

Create and Submit the Job

We create one job that consists of numTasks tasks. Each task consists of executing pctdemo_task_bench(count) and calling pctdemo_taskfin_bench when it has completed. The task finished callback collects the task results and stores them. It also updates the plot with all the results obtained so far. You can view the code for pctdemo_task_bench and pctdemo_taskfin_bench for the details.

job = createJob(myCluster);
for i = 1:numTasks
    task = createTask(job, @pctdemo_task_bench, 1, {count});
    set(task, 'FinishedFcn', @pctdemo_taskfin_bench, ...
              'UserData', fig);
end
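For readers unfamiliar with task callbacks, the sketch below shows the general shape of a FinishedFcn callback. The function name exampleTaskFinished is hypothetical, and the body is only an illustration; it is not the code of pctdemo_taskfin_bench. It assumes the callback receives the task object and an event data argument, and that the task was created with one output argument, as in the createTask call above.

```matlab
function exampleTaskFinished(task, ~)
% exampleTaskFinished  Hypothetical FinishedFcn callback (illustration only).
% Called with the finished task and an event data argument, which is ignored.

    % Report a failed task and stop; there is no result to read in that case.
    if ~isempty(task.ErrorMessage)
        fprintf('Task %d failed: %s\n', task.ID, task.ErrorMessage);
        return;
    end

    % The task was created with one output, so read the first cell.
    result = task.OutputArguments{1};

    % Whatever was stored in UserData at creation (a figure handle in this
    % example) is available here; a real callback would update the plot.
    storedData = task.UserData; %#ok<NASGU>

    fprintf('Task %d finished; its result is of class %s.\n', ...
            task.ID, class(result));
end
```

Such a function would be attached in the same way as above, by passing @exampleTaskFinished as the 'FinishedFcn' value.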

We can now submit the job and wait for it to finish.

submit(job);
wait(job);

Retrieve the Results

As the tasks finish, the task finished callback function collects the task results and updates the output figure. Therefore, we do not need to perform any plotting here, and we simply verify that we obtained all the results we were expecting. fetchOutputs will throw an error if the tasks did not complete successfully, in which case we need to delete the job before throwing the error.

try
    [~] = fetchOutputs(job);
catch err
    delete(job);
    rethrow(err);
end
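When fetchOutputs fails, it can help to see which tasks were responsible before the job is deleted. The helper below is a hypothetical addition, not part of the example code. It assumes each element of its input exposes the ID and ErrorMessage properties, as task objects do.

```matlab
function numFailed = reportTaskErrors(tasks)
% reportTaskErrors  Hypothetical helper (not part of the example code).
% Prints the error message of every failed task and returns how many failed.
    numFailed = 0;
    for k = 1:numel(tasks)
        msg = tasks(k).ErrorMessage;
        if ~isempty(msg)
            numFailed = numFailed + 1;
            fprintf('Task %d failed: %s\n', tasks(k).ID, msg);
        end
    end
end
```

One possible use is to call reportTaskErrors(job.Tasks) inside the catch block, before delete(job), so that the messages are printed while the task objects still exist.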

We have now finished all the verifications, so we can delete the job.

delete(job);