Generating Random Numbers on a GPU

This example shows how to switch between the different random number generators that are supported on the GPU and examines the performance of each of them.

Random numbers form a key part of many simulation or estimation algorithms. Typically these numbers are generated using the functions randrand, randirandi, and randnrandn. Parallel Computing Toolbox™ provides three corresponding functions for generating random numbers directly on a GPU: gpuArray.randgpuArray.rand, gpuArray.randigpuArray.randi, and gpuArray.randngpuArray.randn. These functions can use one of several different number generation algorithms. The generators available on the GPU are listed, described, and their performance measured below.

Discovering the GPU random number generators

The listlist method of parallel.gpu.RandStreamparallel.gpu.RandStream provides a short description of the available generators:

parallel.gpu.RandStream.list

The following random number generator algorithms are available: MRG32K3A: Combined multiple recursive generator (supports parallel streams) Philox4x32-10: Philox 4x32 generator with 10 rounds (supports parallel streams) Threefry4x64-20: Threefry 4x64 generator with 20 rounds (supports parallel streams)

Each of these generators has been designed with parallel use in mind, providing multiple independent streams of random numbers. However, they each have some advantages and disadvantages:

CombRecursive (also known as

MRG32k3a): Matches the MATLAB® generator of the same name and produces identical results given the same initial state. This generator was introduced in 1999 and has been widely tested and used.Threefry4x64-20 : New generator introduced in 2011 but based on the existing cryptographic ThreeFish algorithm, which is widely tested and used. This generator was designed to give good performance in highly parallel systems such as GPUs.

Philox4x32-10 : New generator introduced in 2011, specifically designed for high performance in highly parallel systems such as GPUs.

All of these generators pass the standard TestU01 test suite (see "TestU01: A C library for empirical testing of random number generators" by Pierre L'Ecuyer and Richard Simard, ACM Transactions on Mathematical Software, Vol. 33, No. 4, August 2007.)

Changing the default random number generator

The function parallel.gpu.rngparallel.gpu.rng can store and reset the generator state. It can also switch between the different generators that are provided. Before changing the generator, we keep the existing state so that it can be restored at the end of these tests:

oldState = parallel.gpu.rng;

parallel.gpu.rng(0, 'Philox4x32-10');

disp(parallel.gpu.rng)

Type: 'Philox4x32-10'

Seed: 0

State: [7x1 uint32]

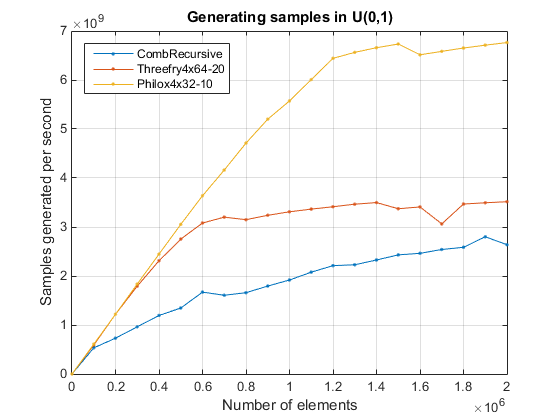

Generating uniformly distributed random numbers

Uniformly distributed random numbers are generated on the GPU using either gpuArray.randgpuArray.rand or gpuArray.randigpuArray.randi. In performance terms, these two functions behave very similarly and only rand is measured here. gputimeitgputimeit is used to measure the performance to ensure accurate timing results, automatically calling the function many times and correctly dealing with synchronization and other timing issues.

generators = {'CombRecursive', 'Threefry4x64-20', 'Philox4x32-10'};

sizes = ([1, 100000:100000:2000000])';

samplesPerSec = nan(numel(sizes), numel(generators));

for g=1:numel(generators)

% Set the generator

parallel.gpu.rng(0, generators{g});

% Loop over sizes, timing the generator

for s=1:numel(sizes)

fcn = @() gpuArray.rand(sizes(s),1);

time = gputimeit(fcn);

samplesPerSec(s,g) = sizes(s)/time;

end

end

% Plot the result

plot(sizes, samplesPerSec, '.-')

legend(generators, 'Location', 'NorthWest')

grid on

xlabel('Number of elements')

ylabel('Samples generated per second')

title('Generating samples in U(0,1)')

The results clearly highlight some major performance differences. Threefry4x64-20 is much faster than CombRecursive, and Philox4x32-10 is much faster than Threefry4x64-20.

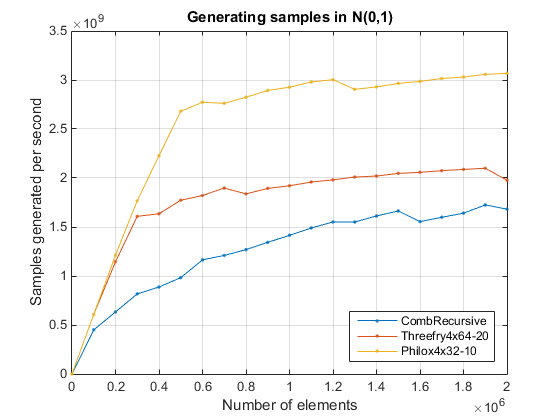

Generating normally distributed random numbers

Many simulations rely on perturbations sampled from a normal distribution. This is done using gpuArray.randngpuArray.randn.

samplesPerSec = nan(numel(sizes), numel(generators)); for g=1:numel(generators) % Set the generator parallel.gpu.rng(0, generators{g}); % Loop over sizes, timing the generator for s=1:numel(sizes) fcn = @() gpuArray.randn(sizes(s),1); time = gputimeit(fcn); samplesPerSec(s,g) = sizes(s)/time; end end % Plot the result plot(sizes, samplesPerSec, '.-') legend(generators, 'Location', 'SouthEast') grid on xlabel('Number of elements') ylabel('Samples generated per second') title('Generating samples in N(0,1)')

As with the uniform test, Threefry4x64-20 is faster than CombRecursive, and Philox4x32-10 is faster than Threefry4x64-20. The extra work required to produce normally distributed values reduces the rate at which values are produced by each of the generators and also reduces the performance differences between them.

Before finishing, restore the original generator state:

parallel.gpu.rng(oldState);

Conclusion

In this example, the three GPU random number generators have been compared. Each provides some advantages (+) and has some caveats (-).

CombRecursive

(

+) CPU version also available for comparison(

+) Long track record in real-world usage(

-) Slowest

Threefry4x64-20

(

+) Fast(

+) Based on well known and well tested Threefish algorithm(

-) Relatively new in real-world usage(

-) No CPU version available for comparison

Philox4x32-10

(

+) Fastest(

-) Relatively new in real-world usage(

-) No CPU version available for comparison