Parallel Computing in Optimization Toolbox Functions

Parallel Optimization Functionality

Parallel computing is the technique of using multiple processors on a single problem. The reason to use parallel computing is to speed up computations.

The Optimization Toolbox™ solvers fmincon, fgoalattain, and fminimax can automatically distribute the numerical estimation of gradients of objective functions and nonlinear constraint functions to multiple processors. These solvers use parallel gradient estimation under the following conditions:

You have a license for Parallel Computing Toolbox™ software.

The option GradObj is set to 'off', or, if there is a nonlinear constraint function, the option GradConstr is set to 'off'. Since 'off' is the default value of these options, you don't have to set them; just don't set them both to 'on'.

Parallel computing is enabled with parpool, a Parallel Computing Toolbox function.

The option UseParallel is set to true. The default value of this option is false.

When these conditions hold, the solvers compute estimated gradients in parallel.
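The conditions above combine into a single Boolean test. The following is a hypothetical Python model and not a MathWorks API. The function `uses_parallel_gradients` and its parameter names are made up for illustration. The parameters `grad_obj`, `grad_constr`, and `use_parallel` mirror the GradObj, GradConstr, and UseParallel options, and `pool_enabled` stands for a pool opened with parpool.

```python
def uses_parallel_gradients(has_pct_license, pool_enabled, use_parallel,
                            grad_obj="off", grad_constr="off",
                            has_nonlcon=False):
    """Hypothetical model of when fmincon, fgoalattain, and fminimax
    estimate gradients in parallel, following the listed conditions.

    grad_obj / grad_constr mirror GradObj / GradConstr: 'off' means the
    solver must estimate that gradient numerically.
    """
    # Something must still be estimated numerically: the objective gradient,
    # or the constraint gradient when a nonlinear constraint function exists.
    needs_estimation = grad_obj == "off" or (has_nonlcon and grad_constr == "off")
    return has_pct_license and pool_enabled and use_parallel and needs_estimation


if __name__ == "__main__":
    # Defaults: both gradient options 'off', UseParallel true, pool enabled.
    print(uses_parallel_gradients(True, True, True))
    # Both options 'on' with a nonlinear constraint: nothing left to estimate.
    print(uses_parallel_gradients(True, True, True, grad_obj="on",
                                  grad_constr="on", has_nonlcon=True))
```

The second call shows why the text says not to set both options to 'on'. When both gradients are supplied, there is no numerical estimation left to parallelize.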

Note: Even when running in parallel, a solver occasionally calls the objective and nonlinear constraint functions serially on the host machine. Therefore, make sure that your functions do not depend on whether they are evaluated in serial or in parallel.

Parallel Estimation of Gradients

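One subroutine was made parallel in the functions fmincon, fgoalattain, and fminimax: the subroutine that estimates the gradient of the objective function and constraint functions. This calculation involves computing function values at points near the current location x. Essentially, the calculation is

$$\nabla f(x) \approx \left[ \frac{f(x + \Delta_1 e_1) - f(x)}{\Delta_1},\ \frac{f(x + \Delta_2 e_2) - f(x)}{\Delta_2},\ \ldots,\ \frac{f(x + \Delta_n e_n) - f(x)}{\Delta_n} \right]$$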

where

f represents objective or constraint functions

$e_i$ are the unit direction vectors

$\Delta_i$ is the size of a step in the $e_i$ direction

To estimate ∇f(x) in parallel, Optimization Toolbox solvers distribute the evaluation of $(f(x + \Delta_i e_i) - f(x))/\Delta_i$ to extra processors.
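A minimal Python sketch of the forward-difference formula follows. It assumes that a thread pool stands in for the extra processors. The following details are assumptions for illustration, and this is not how the toolbox is implemented:

- the name `forward_diff_gradient`
- the `steps` argument (the step sizes $\Delta_i$, which the text does not specify)
- evaluating f(x) once in the caller

Threads keep the sketch short. For CPU-bound pure-Python objectives, a process pool would be needed for a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def forward_diff_gradient(f, x, steps, max_workers=4):
    """Estimate the gradient of f at x by forward finite differences.

    Each component (f(x + Delta_i e_i) - f(x)) / Delta_i is an independent
    task, so the n components can be evaluated on separate workers.
    """
    fx = f(x)  # f(x) is shared by every component: evaluate it once, serially

    def component(i):
        shifted = list(x)
        shifted[i] += steps[i]  # x + Delta_i * e_i
        return (f(shifted) - fx) / steps[i]

    # Distribute the n independent difference quotients across the pool.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(component, range(len(x))))


def example_objective(v):
    # f(v) = v_1^2 + 3 v_2, whose gradient is (2 v_1, 3)
    return v[0] ** 2 + 3 * v[1]


if __name__ == "__main__":
    # At (1, 2) the exact gradient is (2, 3); the estimate should be close.
    print(forward_diff_gradient(example_objective, [1.0, 2.0], steps=[1e-6, 1e-6]))
```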

Parallel Central Differences

You can choose to have gradients estimated by central finite differences instead of the default forward finite differences. The basic central finite difference formula is

$$\nabla f(x) \approx \left[ \frac{f(x + \Delta_1 e_1) - f(x - \Delta_1 e_1)}{2\Delta_1},\ \ldots,\ \frac{f(x + \Delta_n e_n) - f(x - \Delta_n e_n)}{2\Delta_n} \right]$$

This takes twice as many function evaluations as forward finite differences, but is usually much more accurate. Central finite differences are parallelized in exactly the same way as forward finite differences.
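To illustrate the cost and accuracy trade-off, the Python sketch below compares both formulas on f(v) = Σ sin(v_i), whose exact gradient is known. The function names, the step h = 1e-3, and the test point are arbitrary choices for illustration.

Forward differences need n new evaluations plus f(x), which a solver usually already has at the current point. Central differences need 2n evaluations. The truncation error is O(h) for forward differences and O(h²) for central differences.

```python
import math
import threading
from concurrent.futures import ThreadPoolExecutor


def forward_diff(f, x, h, pool):
    fx = f(x)  # one evaluation at the current point

    def component(i):
        xp = list(x)
        xp[i] += h
        return (f(xp) - fx) / h

    return list(pool.map(component, range(len(x))))


def central_diff(f, x, h, pool):
    def component(i):
        xp, xm = list(x), list(x)
        xp[i] += h
        xm[i] -= h
        return (f(xp) - f(xm)) / (2 * h)  # two evaluations per component

    return list(pool.map(component, range(len(x))))


class CountingFunction:
    """Wraps f and counts calls; a lock keeps the count exact across threads."""

    def __init__(self, f):
        self.f = f
        self.calls = 0
        self._lock = threading.Lock()

    def __call__(self, v):
        with self._lock:
            self.calls += 1
        return self.f(v)


def objective(v):
    return sum(math.sin(t) for t in v)  # exact gradient: cos of each coordinate


def compare(x, h=1e-3):
    """Return {method: (number of f calls, max abs gradient error)}."""
    exact = [math.cos(t) for t in x]
    results = {}
    with ThreadPoolExecutor(max_workers=4) as pool:
        for name, method in (("forward", forward_diff), ("central", central_diff)):
            counted = CountingFunction(objective)
            g = method(counted, x, h, pool)
            err = max(abs(a - b) for a, b in zip(g, exact))
            results[name] = (counted.calls, err)
    return results


if __name__ == "__main__":
    print(compare([0.5, 1.0, 1.5]))
```

With n = 3, the forward method makes 4 calls (3 plus f(x)) and the central method makes 6. The central error is several orders of magnitude smaller at this step size.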

Enable central finite differences by using optimoptions to set the FinDiffType option to 'central'. To use forward finite differences, set the FinDiffType option to 'forward'.

Nested Parallel Functions

Solvers employ the Parallel Computing Toolbox function parfor to perform parallel estimation of gradients. parfor does not work in parallel when called from within another parfor loop. Therefore, you cannot simultaneously use parallel gradient estimation and parallel functionality within your objective or constraint functions.

Suppose, for example, your objective function userfcn calls parfor,

and you wish to call fmincon in a loop. Suppose

also that the conditions for parallel gradient evaluation of fmincon,

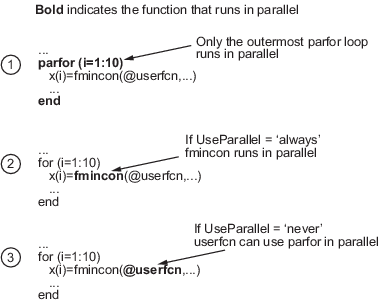

as given in Parallel Optimization Functionality, are satisfied. When parfor Runs In Parallel shows three

cases:

The outermost loop is

parfor. Only that loop runs in parallel.The outermost

parforloop is infmincon. Onlyfminconruns in parallel.The outermost

parforloop is inuserfcn.userfcncan useparforin parallel.

When parfor Runs In Parallel
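The three cases can be modeled in Python to show why only the outermost parallel loop runs in parallel. This is a hypothetical sketch, not MATLAB's implementation:

- `loop` is a made-up stand-in for parfor. It uses a thread pool and a context variable to mimic the rule that a parfor nested inside a running parallel loop executes serially.
- `run_case`, `userfcn`, and `solver` are illustrative names. `solver` plays the role of fmincon's gradient loop, and its `solver_use_parallel` flag plays the role of UseParallel.

```python
import contextvars
import threading
from concurrent.futures import ThreadPoolExecutor

# True while code runs inside a worker of an enclosing parallel loop.
_inside_parallel = contextvars.ContextVar("inside_parallel", default=False)
_lock = threading.Lock()


def loop(name, body, items, parallel, modes):
    """Hypothetical parfor stand-in: parallel only if requested AND not nested
    inside another parallel loop; records the mode actually used in `modes`."""
    run_parallel = parallel and not _inside_parallel.get()
    with _lock:
        modes.setdefault(name, set()).add("parallel" if run_parallel else "serial")
    if not run_parallel:
        return [body(item) for item in items]

    def worker(item):
        _inside_parallel.set(True)  # seen by any loop that body(item) starts
        return body(item)

    with ThreadPoolExecutor(max_workers=4) as pool:
        # Each task runs in its own copy of the caller's context, so the flag
        # set in a worker never leaks back to the caller or to other tasks.
        futures = [pool.submit(contextvars.copy_context().run, worker, item)
                   for item in items]
        return [future.result() for future in futures]


def run_case(outer_parfor, solver_use_parallel):
    """Model a loop that calls a solver whose objective itself uses parfor."""
    modes = {}

    def userfcn(x):
        # The objective always requests its own parallel loop ("calls parfor").
        return sum(loop("userfcn", lambda t: t * t, x, True, modes))

    def solver(x0):
        # Forward-difference gradient loop; f(x0) is recomputed per task
        # for simplicity.
        h = 1e-6

        def partial(i):
            xp = list(x0)
            xp[i] += h
            return (userfcn(xp) - userfcn(x0)) / h

        return loop("solver", partial, range(len(x0)), solver_use_parallel, modes)

    loop("outer", solver, [(1.0, 2.0), (3.0, 4.0)], outer_parfor, modes)
    return {name: sorted(used) for name, used in modes.items()}


if __name__ == "__main__":
    print(run_case(True, True))    # case 1: outer loop is parfor
    print(run_case(False, True))   # case 2: outermost parfor is in the solver
    print(run_case(False, False))  # case 3: outermost parfor is in userfcn
```

In each case, exactly one level reports "parallel", and every loop nested below it reports "serial". This matches the three cases listed above.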